After-hours trading on Wednesday saw Nvidia stock soar close to a $1 trillion market cap after the company reported a startlingly optimistic outlook and CEO Jensen Huang predicted the company would have a “giant record year.”

Nvidia races toward $1 trillion on demand for AI chips

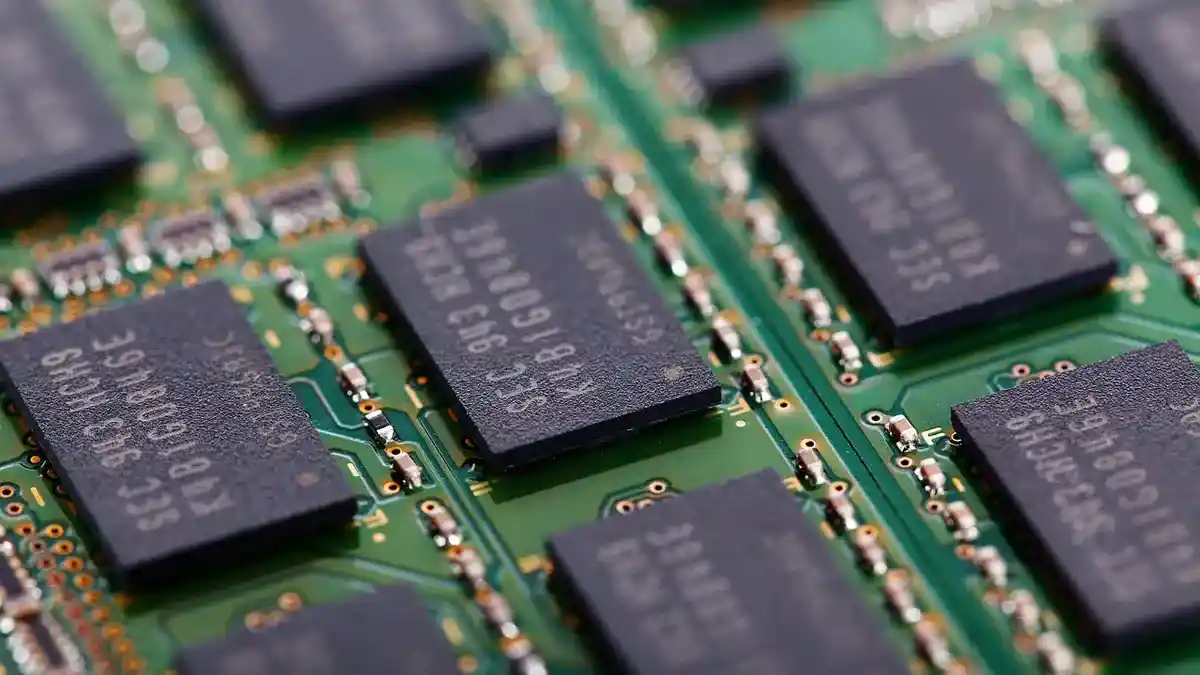

Nvidia’s graphics processors (GPUs), which power AI applications at Google, Microsoft, and OpenAI, are in high demand, which has increased sales.

Nvidia exceeded analyst estimates of $7.15 billion by guiding to $11 billion in sales for the current quarter due to the demand for AI chips in data centers.

Huang stated in an interview with CNBC that “generative AI was the flashpoint.” He added, “Accelerated computing is the way forward, CPU scaling has slowed, and then the game-changing application appeared.”

Nvidia believes it is riding a notable shift in how computers are constructed that may lead to even greater growth; according to Huang, the market for data center components may even reach $1 trillion.

The central processor, also known as the CPU, has traditionally been the most crucial component of a computer or server. Intel has dominated this market, with AMD serving as its main competitor.

The graphics processor (GPU) is taking center stage with the arrival of AI applications that demand a lot of processing power, and the most advanced systems are using as many as eight GPUs to one CPU. In terms of AI GPU sales, Nvidia is currently in the lead.

Huang stated that the “data center of the future will be generative data,” as opposed to the “data center of the past, which was largely CPUs for file retrieval.”

“So instead of having millions of CPUs, you’ll have significantly fewer CPUs, but they’ll be linked to millions of GPUs,” Huang explained.

For example, Nvidia’s current DGX systems, which are effectively AI training computers in a single box, combine eight of the company’s top-of-the-line H100 GPUs with only two top-of-the-line CPUs.

Google’s A3 supercomputer pairs eight H100 GPUs with a single top-of-the-line Intel Xeon processor.

Because of this, Nvidia’s data center business grew 14% during the first quarter of the year compared to AMD’s data center unit’s flat growth and Intel’s AI and Data Center business unit’s decline of 39%.

Additionally, Nvidia GPUs frequently cost more than many central processors. The list price of the most recent Xeon CPU generation from Intel can reach $17,000. On the secondary market, a single Nvidia H100 can fetch $40,000.

As demand for AI chips increases, Nvidia will encounter more competition. Both AMD and Intel have competitive GPU businesses, particularly in the gaming industry. Startups are developing new types of chips specifically for AI, and mobile-focused corporations like Qualcomm and Apple continue to advance the technology in hopes that it will one day be able to run in your pocket rather than in a massive server farm. Amazon and Google are developing custom AI chips.

However, Nvidia’s top-tier GPUs remain the chips of choice for businesses developing applications like ChatGPT. These models are expensive to train, which involves processing terabytes of data, and are also costly to run afterward in a process known as “inference,” in which the model is used to produce text, images, or predictions.

Because of the proprietary software that makes it simple to use all of the GPU hardware features for AI applications, analysts claim that Nvidia is still the market leader for AI chips.

Huang claimed on Wednesday that it would be difficult to duplicate the company’s software.

On a conference call with analysts, Huang stated that “you have to engineer all of the software, all of the libraries, and all of the algorithms, integrate them into and optimize the frameworks, and optimize it for a data center’s overall design, not simply the architecture of a single chip.”
